
Intel’s AI chip business hits $1 billion a year, with target of $10 billion by 2022

Published: 2018-10-24 | Publisher: www.wiseleed.com

Intel has sold more than 220 million Xeon processors over the past 20 years, generating $130 billion in revenues. But the latest $1 billion — generated by sales for artificial intelligence applications — may be the most important.

Navin Shenoy, executive vice president at Intel, said at an Intel event that the company’s AI chip business is strategically important as Intel shifts toward becoming a data-centric company. Five years ago, about a third of Intel’s revenue came from data-centric businesses; now that share is close to half.

But AI isn’t a business that Intel dominates yet. By its own market assessments, Intel has 20 percent market share in key categories. In 2017, just $1 billion of Intel’s Xeon processor business was related to AI.

“Intel must be feeling pretty confident to put out that AI number like that,” said Patrick Moorhead, analyst at Moor Insights & Strategy, in an interview. “Everyone will be asking that in the future. You never do that unless you are confident about the future.”

Rivals such as graphics chipmaker Nvidia and a host of startups are chasing the same market, and Intel may very well be the underdog, because traditional central processing units aren’t necessarily best suited to AI processing, said Linley Gwennap, analyst at the Linley Group, in an interview.

But the company is now pouring a lot of attention into AI chips. Shenoy said the upcoming Cascade Lake Xeon processor, due later this year, will be 11 times better at AI image recognition tasks than the previous-generation Skylake Xeon from 2017.

“We believe data defines the future of our industry and the future of Intel,” Shenoy said. “Ninety percent of the world’s data has been created in the last two years, and only 1 percent of it is being analyzed and used for real business value. We are in the golden age of data.”

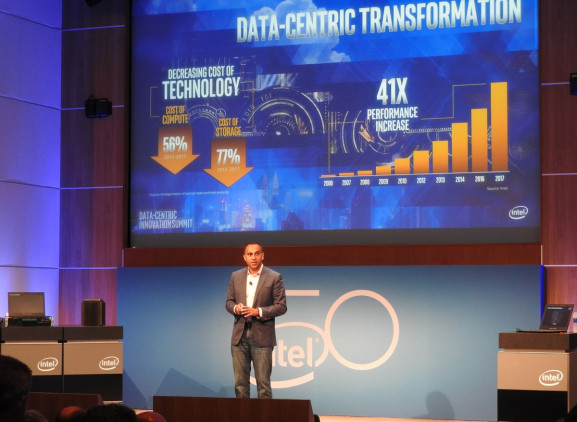

The success of AI software is being driven in part by Moore’s law advances. The cost of computing has fallen 56 percent since 2010, the cost of storage has dropped 77 percent over the same period, and performance increased 41-fold from 2006 to 2017.
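As a rough illustration of what a 41-fold gain from 2006 to 2017 implies, the sketch below converts a total multiple into a compound annual growth rate. It assumes an 11-year span and smooth year-over-year compounding, neither of which the article states; the function name `implied_annual_rate` is ours, not Intel’s.

```python
def implied_annual_rate(multiple: float, years: int) -> float:
    """Return the constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1 / years) - 1


# Assumption: the 41x performance gain is spread evenly over 2006 to 2017 (11 years).
rate = implied_annual_rate(41, 2017 - 2006)
print(f"Implied performance growth: {rate:.1%} per year")
```

Under those assumptions, the figure works out to roughly 40 percent more performance each year.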

But huge tasks lie ahead as Intel moves into the world of self-driving cars. Creating maps for those cars requires cameras that capture and deliver data in real time, and a self-driving car can generate 4 terabytes of data per hour. That data has to be sent to the cloud, analyzed in the data center, and used to generate real-time maps that are then sent back to the cars. Intel estimates that within a relatively short time it will be using data from 2 million cars on the road to crowdsource maps at scale.
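To put the 4-terabytes-per-hour figure in perspective, the sketch below converts it into a sustained per-second rate for a single car. It assumes decimal units (1 TB = 10^12 bytes) and a constant rate across the hour; both are our assumptions, as is the helper name `sustained_rate`.

```python
BYTES_PER_TB = 10**12  # assumption: decimal terabytes
SECONDS_PER_HOUR = 3600


def sustained_rate(tb_per_hour: float) -> tuple[float, float]:
    """Convert TB/hour into (gigabytes per second, gigabits per second)."""
    bytes_per_second = tb_per_hour * BYTES_PER_TB / SECONDS_PER_HOUR
    return bytes_per_second / 10**9, bytes_per_second * 8 / 10**9


gb_s, gbit_s = sustained_rate(4)
print(f"{gb_s:.2f} GB/s, about {gbit_s:.1f} Gbit/s per car")
```

At about 1.1 gigabytes per second per car, the arithmetic shows the scale of data the article describes moving between vehicles and the data center.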

That means AI is going to have to infuse everything, from in-vehicle chips to edge devices to cloud computing, in an end-to-end platform, Shenoy said. He put Intel’s total available market at $200 billion by 2022, up from an estimate last year of $160 billion.


“After 50 years, this is the biggest opportunity for the company,” he said. “We have 20 percent of this market today.”

For AI chips in particular, the market opportunity will grow 30 percent a year, from $2.5 billion in 2017 to $8 billion to $10 billion by 2022, Shenoy said. That’s why AI is becoming a huge part of Intel’s investment in future products.
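The figures in this paragraph can be cross-checked with compound-growth arithmetic. The sketch below projects $2.5 billion forward five years at 30 percent, then computes the annual rates implied by the $8 billion and $10 billion endpoints. It assumes five full years of growth (2017 to 2022); the function names are ours.

```python
def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


YEARS = 2022 - 2017  # assumption: five full years of growth
print(f"$2.5B at 30%/yr for {YEARS} years: ${project(2.5, 0.30, YEARS):.2f}B")
for end in (8.0, 10.0):
    print(f"$2.5B -> ${end:.0f}B implies {cagr(2.5, end, YEARS):.1%} per year")
```

Under these assumptions, 30 percent a year lands at about $9.3 billion, inside the quoted $8 billion to $10 billion range, and the two endpoints imply roughly 26 and 32 percent annual growth.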

“Our strategy is to drive a new era of data center technology,” Shenoy said.

Moorhead, the analyst, said the market size estimates made sense.

“The overall TAM numbers pass the smell test,” Moorhead said. “It shows Intel does have a lot of growth potential even though they have 98 percent market share in the data center.”

To help tackle that challenge, Intel recently hired Jim Keller, a renowned chip expert who has architected important chips for companies ranging from Tesla to Apple. He said the chance to help “change the world faster” is part of the reason he came on board at Intel.

“I like Ray Kurzweil’s line that the future is the future accelerating,” Keller said. “The next 25 years will be bigger than the last 25. The AI revolution is really big. It impacts the client, data center, graphics.”

Part of Keller’s responsibility is to look across all of Intel’s intellectual property (including x86, Intel Architecture, and some of the acquired architectures) and assess the best architecture to use in particular situations. How Intel applies these different Intel-owned architectures will determine how competitive it will be in AI, said Naveen Rao, corporate vice president of Intel’s AI products group, in an interview.

Shenoy also added more detail to Intel’s roadmap. He said Cascade Lake, a future Intel Xeon Scalable processor based on 14-nanometer manufacturing technology, will support Intel Optane DC persistent memory and a set of new AI features called Intel DL Boost.

This embedded AI accelerator will speed up deep learning inference workloads, with image recognition expected to be 11 times faster than on current-generation Intel Xeon Scalable (Skylake, circa 2017) processors. Cascade Lake will ship late this year.

“It’s very hard at this point to see how Intel competes with Nvidia on AI training,” Moorhead said. “There wasn’t any information provided on that. We’ll look at the comparison of Nvidia’s P40 on inferencing compared to Cascade Lake.”

The new addition to the roadmap is Cooper Lake, a Xeon Scalable chip also based on 14-nanometer manufacturing. Cooper Lake will introduce a new-generation platform with better performance, new input-output features, further improvements to the Intel DL Boost instructions, and Optane support. Cooper Lake will debut in 2019.

Lastly, Ice Lake is a future Intel Xeon Scalable processor based on 10-nanometer manufacturing technology. It shares Cooper Lake’s features and is planned to ship in 2020.

Because Ice Lake is a couple of years late, Moorhead said, customers will be happy to have the option of a new Xeon chip in 2019. But he said they would face a tough decision over whether to wait for Ice Lake or adopt Cooper Lake earlier.

Rao said processing needs vary widely from the client to the data center, and Intel recognizes that “one size does not fit all.” That’s why it has different solutions in the works from acquisitions such as Movidius, Mobileye, and Nervana. In 2019, a new Intel Nervana chip will deliver three to four times the training performance of the first-generation chip.

“We are positioned to play in all segments,” Rao said. “This growth is just beginning. It feels like we are in the top of the second inning.”
